Natural sound representation in the spatio-temporal cerebral network: Bridging acoustics, behaviour, and semantics in the real world

Project participants:

- Bruno L. Giordano (Institut de Neurosciences de La Timone – INT, UMR 7289, CNRS and Aix-Marseille University, Marseille, France);

- Daniele Schön and Benjamin Morillon (Institut de Neurosciences des Systèmes – INS, UMR1106, Inserm and Aix-Marseille University, Marseille, France);

- Thierry Artières (Laboratoire d’Informatique et Systèmes – LIS, UMR 7020, CNRS and Aix-Marseille University, Marseille, France);

- Elia Formisano (Department of Cognitive Neuroscience, Faculty of Psychology and Neuroscience, Maastricht University, Maastricht, the Netherlands);

- Robin Ince (Institute of Neuroscience and Psychology, University of Glasgow, Glasgow, United Kingdom)

Project abstract: Identifying objects in the real world is a key function of the auditory system and is fundamental to our well-being (e.g., do I hear a baby crying or a man screaming? Did the glass bounce or break?). Our brain achieves this feat by transforming what we hear into representations that, stage after stage, become progressively tuned to relevant aspects of the sound source (e.g., its category or identity). Many hypotheses have been advanced to account for the representation of natural sounds in the brain (acoustic features, semantic attributes and, more recently, deep neural networks), but little work has been done to contrast them, assess their transfer and integration throughout the cerebral network, and detail their relevance to behaviour. We will address these issues within a multimodal neuroimaging study of natural sounds (3/7T fMRI; MEG; iEEG) that merges multivariate approaches, information theory, and deep learning within the same data-analytic framework. By doing so, we aim to develop a unified, domain-general model of acoustic and semantic processing that challenges current theoretical views while generalizing to all natural sounds, both voices (speech included) and non-voices.

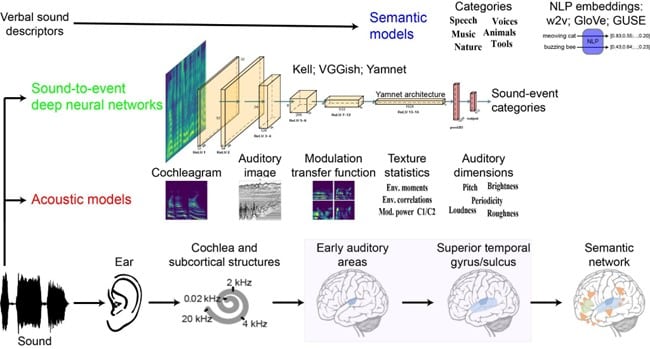

Project figure: Models_v01

Project figure caption: We will acquire multimodal measures of the cerebral response to natural sounds (magnetoencephalography – MEG; high-field functional magnetic resonance imaging – 7T fMRI; intracranial electroencephalography – iEEG). We will investigate the representation of natural sounds by considering computational models from three classes: acoustic models of the heard sound; semantic models derived from the analysis of verbal sound descriptors; and sound-to-event deep neural networks.

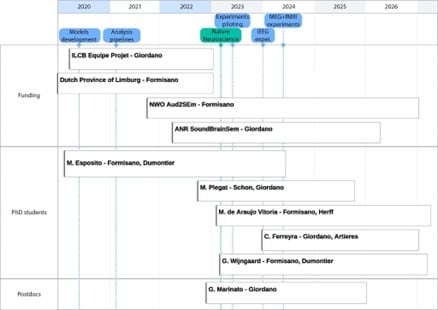

Project, outputs, and ongoing work: The goal of this project is to identify the cerebral representations that make it possible to derive semantic knowledge of objects and events in the auditory environment (e.g., a baby crying, a hammer nailing). To detail the acoustic-to-semantic transformation in the human brain, we map a wide range of computational models (acoustic models; semantic models from natural language processing; deep neural networks that learn to recognize sound event attributes from the input sound) onto multimodal cerebral responses to natural sounds (magnetoencephalography – MEG; high-field functional magnetic resonance imaging – 7T fMRI; intracranial electroencephalography – iEEG). Current outputs directly related to this project include two journal papers (Frontiers in Psychology, 2022; Nature Neuroscience, 2023) and two papers in conference proceedings (2023 IEEE International Conference on Acoustics, Speech and Signal Processing – ICASSP 2023; 31st European Signal Processing Conference – EUSIPCO 2023). Further outputs aided by ILCB support include two journal papers (Cerebral Cortex, 2023; Nature Human Behaviour, 2021). Ongoing work focuses on the collection of neuroimaging data (MEG, fMRI, iEEG) and on the development of neural network models and data analysis approaches.
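As an illustration of what mapping computational models onto cerebral responses can look like in practice, the sketch below uses representational similarity analysis, one common approach to this kind of comparison. It is not the project's actual pipeline. All data are synthetic, and the names `acoustic`, `semantic`, `brain`, `rdm` and `spearman` are hypothetical. The simulated "brain" response is built from the semantic features by construction, so the semantic model is expected to score higher here.

```python
import numpy as np


def rdm(features):
    """Pairwise Euclidean distances between sounds (rows), as a flat vector."""
    diff = features[:, None, :] - features[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    upper = np.triu_indices(features.shape[0], k=1)
    return dist[upper]


def rankdata(x):
    """Ranks of x (no tie handling; adequate for continuous values)."""
    ranks = np.empty(len(x))
    ranks[np.argsort(x)] = np.arange(len(x))
    return ranks


def spearman(a, b):
    """Spearman correlation, computed as the Pearson correlation of ranks."""
    return np.corrcoef(rankdata(a), rankdata(b))[0, 1]


rng = np.random.default_rng(0)
n_sounds = 40

# Hypothetical model features, one row per sound (synthetic, for illustration).
acoustic = rng.normal(size=(n_sounds, 20))
semantic = rng.normal(size=(n_sounds, 30))

# Simulated response over 200 channels: a noisy linear readout of the
# semantic features. This is an assumption of the toy example only.
brain = semantic @ rng.normal(size=(30, 200)) + 0.5 * rng.normal(size=(n_sounds, 200))

# Compare each model's dissimilarity structure with that of the response.
brain_rdm = rdm(brain)
scores = {
    "acoustic": spearman(rdm(acoustic), brain_rdm),
    "semantic": spearman(rdm(semantic), brain_rdm),
}
for name, score in scores.items():
    print(f"{name}: {score:.2f}")
```

With real recordings, the same comparison would typically be repeated across time points (MEG, iEEG) or brain regions (fMRI), with statistical testing that this sketch omits.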

Project publications:

- Giordano, B.L., Esposito, M., Valente, G., & Formisano, E. (2023). Intermediate acoustic-to-semantic representations link behavioral and neural responses to natural sounds. Nature Neuroscience, 26, 664–672. https://hal.science/hal-04065458v1

- Wijngaard, G., Formisano, E., Giordano, B.L., & Dumontier, M. (2023). ACES: evaluating automated audio captioning models on the semantics of sounds. In 31st European Signal Processing Conference (EUSIPCO2023). Helsinki, Finland. https://hal.science/hal-04476412v1

- Esposito, M., Valente, G., Plasencia-Calaña, Y., Dumontier, M., Giordano, B.L., & Formisano, E. (2023). Semantically-informed deep neural networks for sound recognition. In ICASSP 2023 – 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes, Greece. https://hal.science/hal-04476407

- Giordano, B.L., de Miranda Azevedo, R., Plasencia-Calaña, Y., Formisano, E., & Dumontier, M. (2022). What do we mean with sound semantics, exactly? A survey of taxonomies and ontologies of everyday sounds. Frontiers in Psychology, 13. https://hal.science/hal-03845726v1

PhD students and postdoctoral researchers:

- Michele Esposito (PhD student. Start: 2021. Supervision: Formisano and Prof. Michel Dumontier – Maastricht University – and Giordano; role: ANN development);

- Marie Plegat (PhD student. Start: 2022. Supervision: Schön, Giordano and Formisano; role: MEG acquisition/analysis);

- Giorgio Marinato (Postdoctoral researcher. Start: 2022. Supervision: Giordano and Formisano; role: MEG and iEEG acquisition/analysis);

- Maria de Araújo Vitória (PhD student. Start: 2023. Supervision: Formisano, Prof. Christian Herff – Maastricht University – and Giordano; role: fMRI and iEEG acquisition/analysis);

- Gijs Wijngaard (PhD student. Start: 2023. Supervision: Formisano and Dumontier; role: ANN development);

- Christian Ferreyra (PhD student. Start: 2024. Supervision: Artières and Giordano; role: MEG analysis and ANN development).

Project co-funding:

- ANR-21-CE37-0027 (SoundBrainSem to Giordano, Schön, Artières with Formisano and Ince as external collaborators);

- NWO 406.20.GO.030 (Dutch Research Council; Aud2Sem to Formisano, M. Dumontier and C. Herff with Giordano as external collaborator);

- Dutch Province of Limburg (Formisano).

Project timeline: Timeline_ILCB