A Domain-General Syntax for Motor Skills and Language: Exploring the Effects of Syntactic Embedding

Marie-Hélène Grosbras, Marie Montant & Raphaël Py (CRPN)

Doctoral Study Day “The Human at the Center of Sensory Experiences”

Salomé Sudre et al. (PRISM)

BrainHack Marseille 2026

Christelle Zielinski (LPL), Manuel Mercier (INS) & Matthieu Gilson (INT)

OPM 2025 Colloquium: “Optically Pumped Magnetometers”

Christian Bénar, Jean-Michel Badier & Victor Lopez-Madrona (INS)

PracticalMEEG 2025 Conference

Anne-Sophie Dubarry (CRPN), Clément François & Christelle Zielinski (LPL)

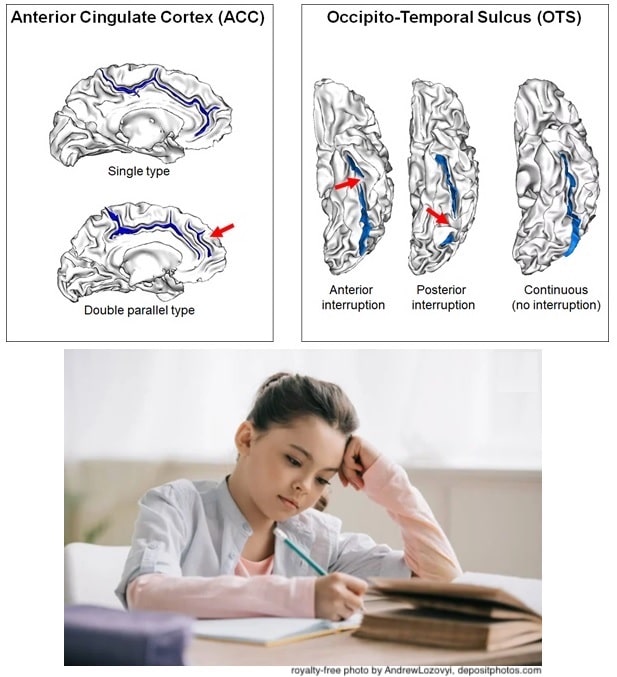

Sulcal Patterns Linked to Reading and Writing Skills

The folding patterns of various sulci were measured on structural MRI scans of children (aged 8–11) and adults (aged 20–40). In both groups, variations in the occipito-temporal sulcus were associated with reading and writing scores. Also in both groups, asymmetry of the anterior cingulate cortex was specifically linked to graphomotor performance, highlighting the role of this structure in the development and practice of writing. Because sulcal patterns are established prenatally and remain stable over time, early brain development may contribute to differences in literacy, alongside the neuroplastic effects of education, learning experiences, and socioeconomic factors.

The Sulcal Patterns of the Occipito-Temporal and Anterior Cingulate Cortices Influence Reading and Writing in Children and Adults.

2025. Cerebral Cortex 35 (6): bhaf145. — @HAL

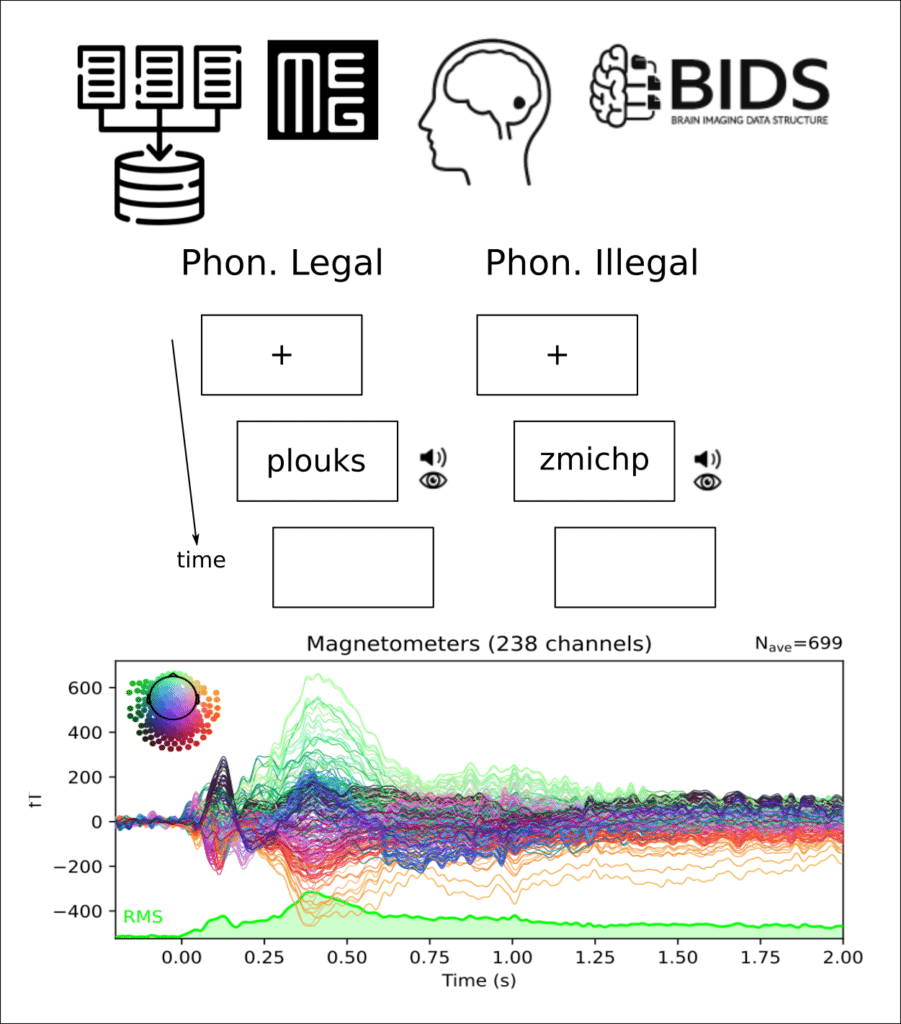

Production of Phonotactically Legal and Illegal Pseudowords

MEG-GLOUPS is a curated dataset of raw magnetoencephalography (MEG) recordings from French speakers performing a pseudoword learning task, along with resting-state recordings acquired before and after the task. The seventeen participants pronounced visually and auditorily presented pseudowords that either followed or violated French phonotactic rules. The dataset follows the Brain Imaging Data Structure (BIDS) standard and includes basic preprocessing and quality checks. Comprehensive documentation covers the study’s rationale, design, data collection, and validation.

Valérie Chanoine, Snežana Todorović, Bruno Nazarian, Jean-Michel Badier, Khoubeib Kanzari, Andrea Brovelli, Sonja A. Kotz, and Elin Runnqvist.

Dataset for Evaluating the Production of Phonotactically Legal and Illegal Pseudowords

2025. Scientific Data 12 (1): 792. — @HAL

Effects of Daily Creative Writing Practice at School on the Cognitive Development of Children from Disadvantaged Socio-Economic Backgrounds

Cédric Hubert, Nathalie Bonnardel, and Aline Frey.

2025. Thinking Skills and Creativity 58: 101881. — @HAL

The Role of Social and Emotional Experience in Representing Abstract Words

Daria Goriachun, Kristof Strijkers, Núria Gala, and Johannes C. Ziegler.

2025. Journal of Experimental Psychology: General, May. — @HAL