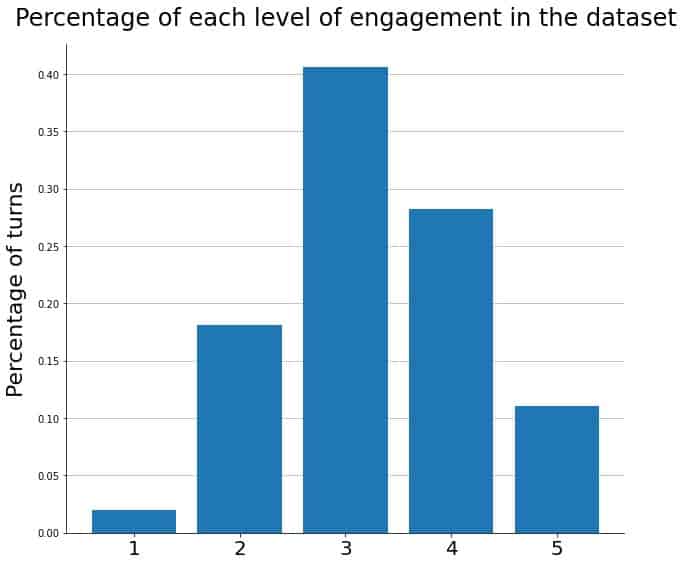

The engagement of participants varies considerably during a conversation, with direct consequences for the quality and success of the interaction. How is this engagement implemented? We propose a new model of engagement based on a multimodal description that encompasses as many cues as possible from prosody, gestures, facial expressions, lexicon, and syntax. We used classical machine learning algorithms to build different variants of the model from original datasets of natural conversations (see figure). We conducted several classification experiments, ranging from binary to five-class. Our results constitute the state of the art for this task on human-human conversations. They also make it possible to disentangle the respective contribution of each modality to conversational engagement.
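
To make the setup concrete, the following is a minimal sketch of how per-modality cues might be combined into one feature vector and how a single engagement annotation might be mapped to label sets of different granularity. It is an illustration under stated assumptions, not the authors' implementation: the function names `fuse` and `to_class`, the concatenation (early fusion) strategy, the engagement score in [0, 1], and the equal-width binning are all hypothetical and do not come from the text above.

```python
from typing import Dict, List, Sequence

# Modalities named in the abstract; the identifiers themselves are hypothetical.
MODALITIES = ("prosody", "gestures", "facial_expressions", "lexicon", "syntax")


def fuse(features: Dict[str, Sequence[float]],
         keep: Sequence[str] = MODALITIES) -> List[float]:
    """Concatenate per-modality feature vectors in a fixed order.

    Passing a subset as `keep` drops the other modalities, which is one
    simple way to probe how much each modality contributes (ablation).
    """
    fused: List[float] = []
    for name in MODALITIES:
        if name in keep:
            fused.extend(features[name])
    return fused


def to_class(score: float, n_classes: int) -> int:
    """Map an engagement score in [0, 1] to one of `n_classes` equal-width bins.

    Assumption: engagement is annotated as a score in [0, 1]; the source
    does not say how its binary to five-class labels were derived.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    # A score of exactly 1.0 falls into the top bin.
    return min(int(score * n_classes), n_classes - 1)


# Toy feature values for one conversational segment (made up for illustration).
segment = {
    "prosody": [0.1, 0.2],
    "gestures": [1.0],
    "facial_expressions": [0.0],
    "lexicon": [3.0],
    "syntax": [2.0],
}

all_cues = fuse(segment)
without_prosody = fuse(segment, keep=[m for m in MODALITIES if m != "prosody"])
binary_label = to_class(0.5, 2)
five_class_label = to_class(0.5, 5)
```

The fused vectors could then be passed to any classical classifier. Training one model per `keep` subset and comparing scores is one way, among others, to estimate the contribution of each modality.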