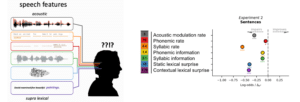

Humans are experts at processing speech, but how this feat is accomplished remains a major open question. We investigated how speech comprehension is determined by seven different linguistic features, ranging from acoustic modulation rate to contextual lexical information. All of these features independently affect the comprehension of accelerated speech, with a clear dominance of the syllabic rate (the orange data point in the figure). We also derived the channel capacity, i.e., the maximum rate at which information can be transmitted, associated with each linguistic feature. From these observations, we articulate an account of speech comprehension that unifies dynamical, informational, and NLP frameworks.

Jérémy Giroud, Jacques Pesnot Lerousseau, François Pellegrino, and Benjamin Morillon. 2023.

The Channel Capacity of Multilevel Linguistic Features Constrains Speech Comprehension.