On the Acquisition of Typing Skills without Formal Training by School-Aged Children

Svetlana Pinet, Christelle Zielinski, F.-Xavier Alario, and Marieke Longcamp.

2025. Reading and Writing, November 25. — @HAL

Event-Related Brain Potentials and Frequency-Following Response to Syllables in Newborns and Adults

G. Danielou, E. Hervé, A. S. Dubarry, B. Desnous, and C. François.

2026. European Journal of Neuroscience 63 (3): e70418 — @HAL

Bridging the Communication Gap: Pragmatics and Interactional Dynamics in Deaf and Hard of Hearing Children

Chiara Mazzocconi, Céline Hidalgo, Charlie Hallart, Stéphane Roman, Roxane Bertrand, Leonardo Lancia, and Daniele Schön.

2026. Journal of Speech, Language, and Hearing Research, February 20, 1–38 — @HAL

Beyond Phonemic Awareness: The Alphabetic Principle Predicts Reading Acquisition in a Nationwide Longitudinal Study

Paul Gioia, Johannes C. Ziegler, and Jerome Deauvieau.

2026. Cognition 271 (June): 106457 — @HAL

Neural Correlates of Conversational Feedback

Philippe Blache, Deirdre Bolger, Mireille Besson, Auriane Boudin, and Roxane Bertrand.

2026. Language, Cognition and Neuroscience, January 27, 1–26 — @HAL

Opportunistic Observation of Long-Finned Pilot Whales Interacting with a Solitary Humpback Whale in the Gascogne Gulf (Northwest Atlantic)

Paul Best, Lise Habib-Dassetto, Jules Cauzinille, Thierry Legou, Fabienne Delfour, and Marie Montant.

2025. Aquatic Mammals 51 (6): 515–20. — @HAL

Sequence Processing in Visuo-Phonological and Visuo-Motor Modalities

Simon Thibault, LPL & CRPN

Forum des Sciences Cognitives de Marseille

Association Les Neuronautes

Combining Spatial Wavelets and Sparse Bayesian Learning for Extended Brain Sources Reconstruction

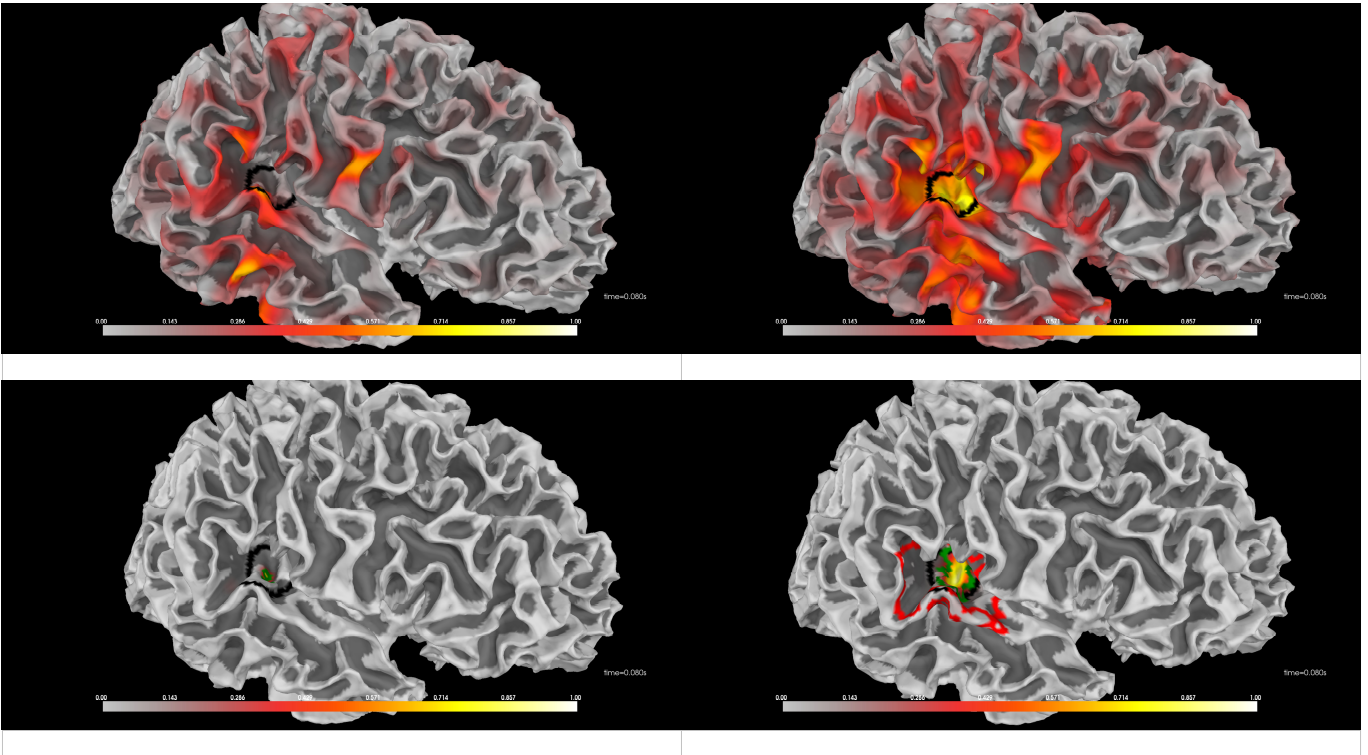

In M/EEG source reconstruction, most distributed source models strongly overestimate the spatial extent of brain activity and underestimate its depth and amplitude. In the figure, the black contour line marks the boundary of the “aud-rh” region given by MNE-Python.

The top row shows the results of the MNE and eLORETA algorithms (implemented in MNE-Python with the default parameters), both of which suffer significantly from these limitations, although eLORETA does manage to estimate depth. Sparse Bayesian Learning (SBL, bottom left) locates the activity accurately but underestimates its spatial extent. Combining SBL with spectral graph wavelets (bottom right) correctly locates the activity, estimates its spatial extent and depth, and yields a quantitatively relevant amplitude estimate. The red and green lines are level curves at 1% and 10% of the source amplitude, respectively.

Combining Spatial Wavelets and Sparse Bayesian Learning for Extended Brain Sources Reconstruction

2025. IEEE Transactions on Biomedical Engineering, 1–12. — @HAL

Cognitive Engineering course

As part of the Cognitive Engineering course (UE Ingénierie Cognitive), MaSCo students—divided into five groups—designed and developed innovative projects based on the measurement of physiological or behavioral signals. These projects required skills in data analysis, experimental design, and the development of technological tools (sensors, virtual reality, etc.).

The final presentations took place on January 30, 2026. The event began with a guest lecture by Ana Zappa, a former ILCB member now affiliated with the Universitat de Barcelona. Ana presented her work on virtual reality and offered insights into potential applications of immersive technologies in cognitive engineering.

Christelle, Ambre, Deirdre, & Thierry